RC-MVSNet: Unsupervised Multi-View Stereo with Neural Rendering

Oct 23, 2022

Di Chang

Aljaž Božič

Tong Zhang

Qingsong Yan

Ying-Cong Chen

Sabine Süsstrunk

Matthias Nießner

Abstract

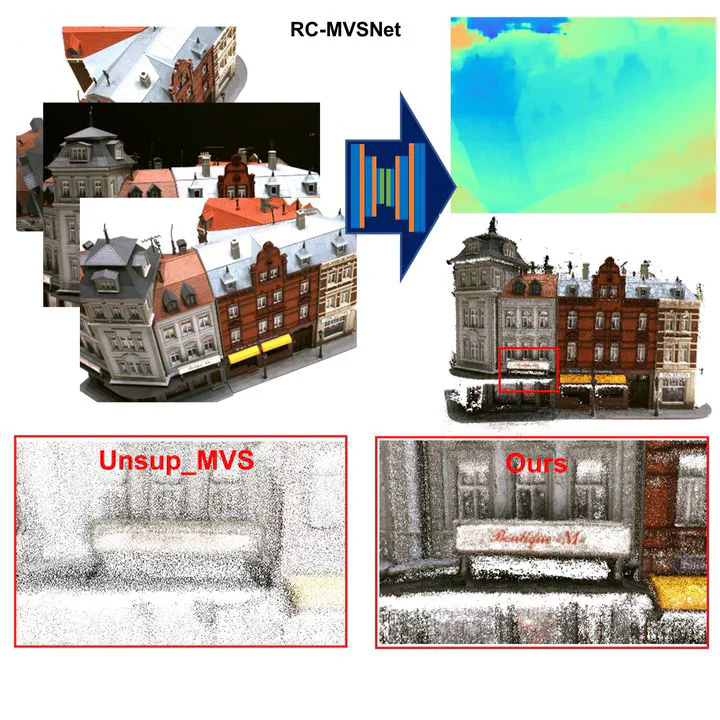

Finding accurate correspondences among different views is the Achilles heel of unsupervised Multi-View Stereo (MVS). Existing methods are built on the assumption that corresponding pixels share similar photometric features. In real scenes, however, multi-view images capture non-Lambertian surfaces and occlusions, both of which violate this assumption. In this work, we propose a novel approach based on neural rendering (RC-MVSNet) to resolve these correspondence ambiguities across views. Specifically, we impose a depth rendering consistency loss that constrains geometry features to lie close to the object surface, alleviating the effect of occlusions. Concurrently, we introduce a reference view synthesis loss that provides consistent supervision, even for non-Lambertian surfaces. Extensive experiments on the DTU and Tanks&Temples benchmarks demonstrate that RC-MVSNet achieves state-of-the-art performance among unsupervised MVS frameworks and performance competitive with many supervised methods.
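The abstract does not spell out the rendering model, so the following is only a rough illustration of how a rendered depth and a rendered color can feed the two losses. It assumes standard NeRF-style alpha compositing along a single ray, with densities and colors given directly rather than predicted by a network. The function names (`render_ray`, `depth_consistency_loss`, `view_synthesis_loss`) and the plain L1 form of both losses are hypothetical choices for this sketch, not RC-MVSNet's actual implementation.

```python
import math


def render_ray(sigmas, colors, t_vals):
    """Alpha-composite densities and colors sampled along one ray (NeRF-style sketch).

    sigmas: non-negative densities at each sample
    colors: (r, g, b) tuples at each sample
    t_vals: strictly increasing sample depths along the ray
    Returns (rendered_depth, rendered_rgb, weights).
    """
    n = len(t_vals)
    # Interval lengths; the last sample gets a huge delta so it absorbs any remaining mass.
    deltas = [t_vals[i + 1] - t_vals[i] for i in range(n - 1)] + [1e10]
    weights = []
    transmittance = 1.0  # probability that the ray reaches the current sample unoccluded
    for sigma, delta in zip(sigmas, deltas):
        alpha = 1.0 - math.exp(-sigma * delta)  # opacity of this interval
        weights.append(transmittance * alpha)
        transmittance *= 1.0 - alpha
    # Expected depth and color under the per-sample weights.
    depth = sum(w * t for w, t in zip(weights, t_vals))
    rgb = tuple(sum(w * c[k] for w, c in zip(weights, colors)) for k in range(3))
    return depth, rgb, weights


def depth_consistency_loss(rendered_depths, mvs_depths):
    # Hypothetical L1 form: mean absolute gap between rendered depth and MVS-predicted depth.
    return sum(abs(r - m) for r, m in zip(rendered_depths, mvs_depths)) / len(mvs_depths)


def view_synthesis_loss(rendered_rgbs, ref_rgbs):
    # Hypothetical L1 photometric loss between rendered colors and reference-view pixels.
    total = 0.0
    for rendered, ref in zip(rendered_rgbs, ref_rgbs):
        total += sum(abs(a - b) for a, b in zip(rendered, ref)) / 3.0
    return total / len(ref_rgbs)


if __name__ == "__main__":
    # One opaque red sample at depth 2.0 between two empty samples.
    depth, rgb, _ = render_ray(
        sigmas=[0.0, 50.0, 0.0],
        colors=[(0.0, 0.0, 0.0), (1.0, 0.0, 0.0), (0.0, 0.0, 1.0)],
        t_vals=[1.0, 2.0, 3.0],
    )
    print(depth, rgb)  # prints 2.0 (1.0, 0.0, 0.0)
    print(depth_consistency_loss([depth], [2.5]))  # prints 0.5
```

Because the rendered depth is a weighted average over samples, penalizing its disagreement with the MVS depth pushes density mass toward a single surface along the ray, which is one plausible reading of "constraining geometry features close to the object surface".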

Type

Publication

Proceedings of the European Conference on Computer Vision (ECCV)