An asymmetric distance model for cross-view feature mapping in person reidentification

Jan 1, 2016

Ying-Cong Chen, Wei-Shi Zheng, Jian-Huang Lai, Pong C. Yuen

Abstract

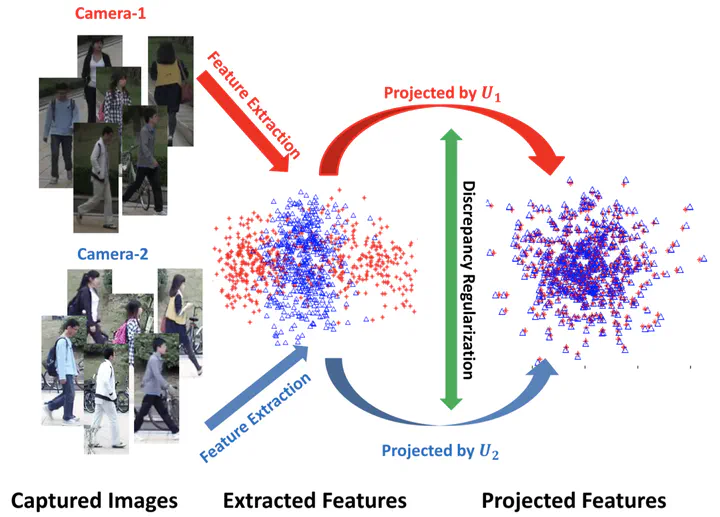

Person reidentification, which matches person images of the same identity across nonoverlapping camera views, has become an important component of cross-camera-view activity analysis. Most (if not all) person reidentification algorithms are designed based on appearance features. However, appearance features are not stable across nonoverlapping camera views under dramatic lighting changes, and these algorithms assume that two cross-view images of the same person can be well represented either by exploring robust and invariant features or by learning a matching distance. Such an assumption ignores the fact that images are captured under different camera views with different camera characteristics and environments; as a result, there is usually a large discrepancy between the features extracted under different views. To solve this problem, we formulate an asymmetric distance model that learns camera-specific projections to transform the unmatched features of each view into a common space where discriminative features across views are extracted. A cross-view consistency regularization is further introduced to model the correlation between the view-specific feature transformations of different camera views, which reflects their natural relations and plays a significant role in avoiding overfitting. A kernel cross-view discriminant component analysis is also presented. Extensive experiments show that asymmetric distance modeling is important for person reidentification, consistent with the nature of matching across disjoint camera views, and report superior performance compared with related distance learning methods on six publicly available data sets.
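The core idea of an asymmetric distance can be illustrated with a minimal sketch. It assumes linear camera-specific projections `U_a` and `U_b`, a squared Euclidean distance in the common space, and a cross-view consistency term written as the squared Frobenius norm of `U_a - U_b`; these forms, all function names, and the toy data are illustrative assumptions rather than the paper's exact formulation. How the projections are learned (and the kernel variant) is not shown.

```python
import numpy as np


def asymmetric_distance(x_a: np.ndarray, x_b: np.ndarray,
                        U_a: np.ndarray, U_b: np.ndarray) -> float:
    """Squared distance between x_a (seen by camera a) and x_b (seen by
    camera b) after projecting each with its own camera-specific matrix
    into a shared space. Assumed form: ||U_a^T x_a - U_b^T x_b||^2."""
    diff = U_a.T @ x_a - U_b.T @ x_b
    return float(diff @ diff)


def consistency_penalty(U_a: np.ndarray, U_b: np.ndarray) -> float:
    """Hypothetical cross-view consistency regularizer: squared Frobenius
    norm of the difference between the two view-specific projections.
    It discourages the projections from drifting arbitrarily far apart."""
    return float(np.linalg.norm(U_a - U_b, ord="fro") ** 2)


def rank_gallery(probe: np.ndarray, gallery: np.ndarray,
                 U_a: np.ndarray, U_b: np.ndarray) -> list:
    """Return indices of gallery rows (camera b), best match first,
    for a single probe feature vector from camera a."""
    dists = [asymmetric_distance(probe, g, U_a, U_b) for g in gallery]
    return [int(i) for i in np.argsort(dists)]


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    d = 4
    # Hypothetical toy setting: camera b doubles every feature value,
    # a view-specific distortion that a shared projection would not undo.
    identities = rng.normal(size=(3, d))
    view_a = identities
    view_b = 2.0 * identities
    U_a = np.eye(d)
    U_b = 0.5 * np.eye(d)  # camera-specific projection undoes the scaling
    print(rank_gallery(view_a[1], view_b, U_a, U_b)[0])  # 1
    print(consistency_penalty(U_a, U_b))  # 1.0
```

In this toy case the matched pair lands at distance zero only because each camera gets its own projection, while the penalty term quantifies how far the two projections deviate from each other; in a learning objective it would be traded off against the discriminative term.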

Type

Publication

In IEEE Transactions on Circuits and Systems for Video Technology