Mirror representation for modeling view-specific transform in person re-identification

Abstract

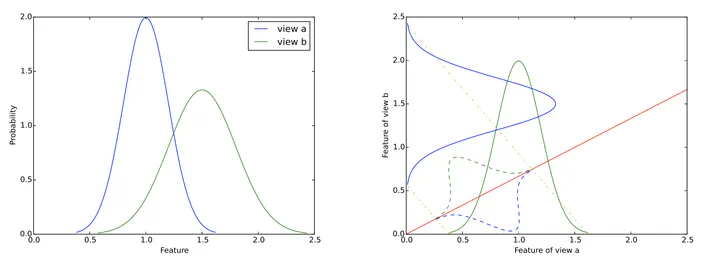

Person re-identification concerns the matching of pedestrians across disjoint camera views. Due to the changes of viewpoints, lighting conditions and camera features, images of the same person from different views always appear differently, and thus feature representations across disjoint camera views of the same person follow different distributions. In this work, we propose an effective, low cost and easy-to-apply schema called the Mirror Representation, which embeds the view-specific feature transformation and enables alignment of the feature distributions across disjoint views for the same person. The proposed Mirror Representation is also designed to explicitly model the relation between different view-specific transformations and meanwhile control their discrepancy. With our Mirror Representation, we can enhance existing subspace/ metric learning models significantly, and we particularly show that kernel marginal fisher analysis significantly outperforms the current state-of-the-art methods through extensive experiments on VIPeR, PRID450S and CUHK01.

Type

Publication

Proceedings of the International Conference on Artificial Intelligence